# Configure a run

Run submissions can be configured through a YAML file or using our Python API’s RunConfig class.

The fields are identical across both methods:

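| Field | Type | Required? |
|---|---|---|
| `name` | `str` | required |
| `image` | `str` | required |
| `command` | `str` | required |
| `compute` | `Dict` | required |
| `scheduling` | `Dict` | optional |
| `integrations` | `List[Dict]` | optional |
| `env_variables` | `Dict[str, str]` | optional |
| `parameters` | `Dict[str, Any]` | optional |
| `metadata` | `Dict[str, Any]` | optional |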

Here’s an example run configuration:

```yaml
name: hello-composer
image: mosaicml/pytorch:latest
command: 'echo $MESSAGE'
compute:
  cluster: <fill-in-with-cluster-name>
  gpus: 0
scheduling:
  priority: low
integrations:
  - integration_type: git_repo
    git_repo: mosaicml/benchmarks
    git_branch: main
env_variables:
  MESSAGE: "hello composer!"
```

Using the Python API:

```python
from mcli import RunConfig

config = RunConfig(
    name='hello-composer',
    image='mosaicml/pytorch:latest',
    command='echo $MESSAGE',
    compute={'gpus': 0},
    scheduling={'priority': 'low'},
    integrations=[
        {
            'integration_type': 'git_repo',
            'git_repo': 'mosaicml/composer',
            'git_branch': 'main',
        }
    ],
    env_variables={'MESSAGE': 'hello composer!'},
)
```
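The required/optional split in the table above can be checked before submission. Below is a minimal sketch that assumes the four required fields are `name`, `image`, `command`, and `compute`, and that the optional ones are `scheduling`, `integrations`, `env_variables`, `parameters`, and `metadata`. `check_run_config` is a hypothetical helper, not part of mcli, which performs its own validation when you submit.

```python
from typing import Any, Dict, List

# Hypothetical helper, not part of mcli: field names are assumed from the
# examples on this page. mcli performs its own validation on submission.
REQUIRED_FIELDS = {"name", "image", "command", "compute"}
OPTIONAL_FIELDS = {"scheduling", "integrations", "env_variables", "parameters", "metadata"}


def check_run_config(config: Dict[str, Any]) -> List[str]:
    """Return human-readable problems with a run config dict (empty if none)."""
    keys = set(config)
    problems = [f"missing required field: {f}" for f in sorted(REQUIRED_FIELDS - keys)]
    problems += [f"unknown field: {f}" for f in sorted(keys - REQUIRED_FIELDS - OPTIONAL_FIELDS)]
    return problems


config = {
    "name": "hello-composer",
    "image": "mosaicml/pytorch:latest",
    "command": "echo $MESSAGE",
    "compute": {"gpus": 0},
    "scheduling": {"priority": "low"},
    "env_variables": {"MESSAGE": "hello composer!"},
}
print(check_run_config(config))  # []
print(check_run_config({"name": "x", "gpus": 8}))
```

The second call reports the three missing required fields and flags `gpus` as unknown, because `gpus` belongs inside `compute` rather than at the top level.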

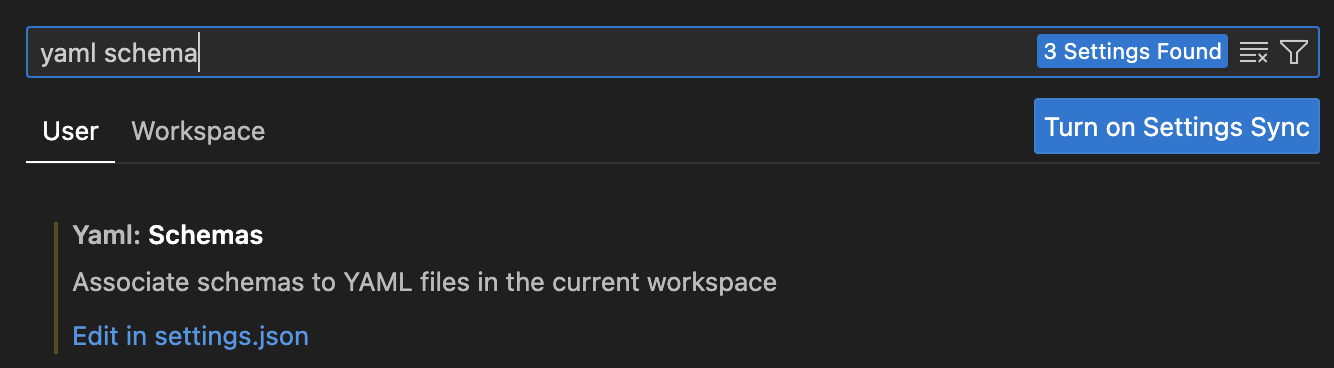

## Setting up a YAML Schema in VSCode

You can get autocomplete suggestions and static checking for training YAML files by using a JSON Schema in VSCode.

To configure this in your local VSCode environment:

1. Install the YAML extension in VSCode.
2. Download the JSON Schema file `training.json`.
3. Open Settings from Code → Preferences → Settings.
4. Search for `YAML Schema` and select "Edit in settings.json".
5. Under `"yaml.schemas"`, add the code listed below. The key is a link to the JSON file that specifies the YAML schema. The value specifies which YAML files are targeted.

   ```json
   "yaml.schemas": {
       "https://raw.githubusercontent.com/mosaicml/examples/melissa/yaml_schema/training.json": "**/mcli/**/*.yaml"
   }
   ```

6. Restart VSCode.

Pressing CTRL + Space should now trigger autocomplete for any YAML file located in `mcli/`.

## Field Types

### Run Name

A run name is the primary identifier for working with runs.

For each run, a unique identifier is automatically appended to the provided run name.

After you submit a run, the terminal displays the finalized unique name. You can also view it with `mcli get runs` or through the `Run` object in the Python API.
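Because of this suffix, later lookups (such as `hello-world-VC5nFs` in the Metadata section of this page) must use the finalized name rather than the name you submitted. The hypothetical sketch below appends a random suffix only to illustrate the idea. It mimics the shape of that example name, but the platform generates the real identifier, and its format here is an assumption.

```python
import random
import string
from typing import Optional

# Hypothetical: mimics the shape of names such as "hello-world-VC5nFs".
# The real suffix is generated by the platform; its format is an assumption.
SUFFIX_ALPHABET = string.ascii_letters + string.digits


def make_unique_run_name(name: str, suffix_len: int = 6,
                         rng: Optional[random.Random] = None) -> str:
    """Append a random alphanumeric suffix to a user-provided run name."""
    rng = rng or random.Random()
    suffix = "".join(rng.choice(SUFFIX_ALPHABET) for _ in range(suffix_len))
    return f"{name}-{suffix}"


submitted = "hello-world"
finalized = make_unique_run_name(submitted)
print(finalized)  # random suffix, differs on every call
# Look the run up later by `finalized`, not by `submitted`.
```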

### Image

Runs are executed within Docker containers defined by a Docker image.

Images on DockerHub can be configured as `<organization>/<image name>`.

For private DockerHub repositories, add a Docker secret with:

```bash
mcli create secret docker
```

For more details, see the Docker Secret Page.

Using Alternative Docker Registries

DockerHub is the default, but custom registries are also supported. See Docker’s documentation and the Docker Secret Page for more details.
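To show how an image string splits into registry, repository, and tag, here is a minimal sketch that follows Docker's own naming conventions. It does not describe mcli's internal parsing. In Docker's conventions, a reference without a registry host resolves to DockerHub (`docker.io`), and single-component names such as `python` or `bash` are official images under `library/`. `parse_image` and `ImageRef` are hypothetical names, and digests (`@sha256:...`) are deliberately left out.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str    # registry host; "docker.io" (DockerHub) when none is given
    repository: str  # e.g. "mosaicml/pytorch"
    tag: str         # "latest" when no tag is given


def parse_image(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, and tag.

    Uses Docker's convention: the first path component is a registry host only
    if it contains '.' or ':' or is 'localhost'. Digests ('@sha256:...') are
    not handled in this sketch.
    """
    registry, remainder = "docker.io", ref
    first, sep, rest = ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    repository, tag = remainder, "latest"
    colon = remainder.rfind(":")
    if colon > remainder.rfind("/"):  # a ':' after the last '/' starts the tag
        repository, tag = remainder[:colon], remainder[colon + 1:]
    if registry == "docker.io" and "/" not in repository:
        repository = f"library/{repository}"  # official images like "python"
    return ImageRef(registry, repository, tag)


print(parse_image("mosaicml/pytorch:latest"))
# ImageRef(registry='docker.io', repository='mosaicml/pytorch', tag='latest')
print(parse_image("python"))
# ImageRef(registry='docker.io', repository='library/python', tag='latest')
print(parse_image("registry.example.com:5000/team/trainer:v2"))
```

The last call shows a custom registry host with a port (`registry.example.com:5000` is a placeholder). The port's colon is not mistaken for a tag because the tag is only read after the last `/`.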

### Command

The command is what runs when the run starts. It typically launches your training jobs and scripts. For example, the following command:

```yaml
command: |
  echo Hello World!
```

produces a run that prints “Hello World!” to the console.

If you are training models with Composer, the command field is where you write your Composer launch command.

### Compute Fields

The compute field specifies which compute resources to request for your run. We try to infer which compute resources to use automatically. Which fields are required depends on which clusters, and which types of clusters, are available to your organization. If the requested resources are not valid, or if multiple options still remain, an error is raised on run submission and the run is not created.
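The cluster part of the inference rule in the paragraph above can be sketched as follows. `resolve_cluster` is hypothetical and is not mcli's actual code. It only illustrates the stated behavior: a requested cluster must be valid, and an omitted one can be inferred only when exactly one option remains. In every other case, submission fails.

```python
from typing import List, Optional


def resolve_cluster(requested: Optional[str], available: List[str]) -> str:
    """Hypothetical sketch of cluster inference; not mcli's actual code.

    An explicitly requested cluster must be available. With no request, a
    cluster can be inferred only if exactly one is available; otherwise the
    submission fails and no run is created.
    """
    if requested is not None:
        if requested not in available:
            raise ValueError(f"cluster {requested!r} is not available; choose from {available}")
        return requested
    if len(available) == 1:
        return available[0]
    raise ValueError(f"cannot infer a cluster from {available}; set compute.cluster")


print(resolve_cluster(None, ["my-cluster"]))  # my-cluster
try:
    resolve_cluster(None, ["cluster-a", "cluster-b"])
except ValueError as err:
    print("submission rejected:", err)
```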

| Field | Type | Details |
|---|---|---|
| `gpus` | `int` | Typically required, unless you specify `nodes` |
| `cluster` | `str` | Required if you have multiple clusters |
| `gpu_type` | `str` | Optional |
| `instance` | `str` | Optional. Only needed if the cluster has multiple GPU instances |
| `cpus` | `int` | Optional. Typically not used other than for debugging small deployments. |
| `nodes` | `int` | Optional. Alternative to `gpus` |
| `node_names` | `List[str]` | Optional. Names of the nodes to use in the run. |

You can see the clusters, instances, and compute resources available to you using:

```bash
mcli get clusters
```

For example, you can launch a multi-node run on the cluster `my-cluster` with 16 A100 GPUs:

```yaml
compute:
  cluster: my-cluster
  gpus: 16
  gpu_type: a100_80gb
```

### Scheduling

The scheduling field governs how the run scheduler manages your run.

It is a simple dictionary with the following keys:

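| Field | Type | Required? |
|---|---|---|
| `priority` | `str` | optional |
| `preemptible` | `bool` | optional |
| `max_retries` | `int` | optional |
| `retry_on_system_failure` | `bool` | optional |
| `max_duration` | `float` | optional |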

`priority`: Runs in the scheduling queue are sorted first by priority, then by creation time.

The `priority` field defaults to `auto` when it is omitted or set to an unavailable option.

You can set it to `low` or `lowest` so that other runs get higher priority.

On a reserved cluster, best practice is usually to run large numbers of experimental runs (think exploratory hyperparameter sweeps) at `low` priority so your teammates’ runs get higher priority. Important “hero” runs should use the default `auto` priority. On a shared cluster, we recommend leaving the default of `auto` so your runs are scheduled as fast as possible. If you lower the priority, other runs will queue above your own.
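The queue ordering described above (priority first, then creation time) is a two-part sort key. Below is a minimal sketch under the assumption that `auto` ranks above `low`, which ranks above `lowest`. The numeric ranks and the `QueuedRun` class are hypothetical, and unknown values fall back to `auto` to mirror the stated default.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List

# Hypothetical ranks: smaller sorts first. Unknown values fall back to "auto",
# mirroring the default described above.
PRIORITY_RANK = {"auto": 0, "low": 1, "lowest": 2}


@dataclass
class QueuedRun:
    name: str
    priority: str
    created_at: datetime


def queue_order(runs: List[QueuedRun]) -> List[QueuedRun]:
    """Sort by priority first, then by creation time (oldest first)."""
    return sorted(runs, key=lambda r: (PRIORITY_RANK.get(r.priority, 0), r.created_at))


runs = [
    QueuedRun("sweep-1", "low", datetime(2023, 1, 1, 9)),
    QueuedRun("hero", "auto", datetime(2023, 1, 1, 10)),
    QueuedRun("sweep-2", "low", datetime(2023, 1, 1, 8)),
    QueuedRun("debug", "lowest", datetime(2023, 1, 1, 7)),
]
print([r.name for r in queue_order(runs)])  # ['hero', 'sweep-2', 'sweep-1', 'debug']
```

The `hero` run was submitted last but still goes first. Within the `low` tier, the older sweep is ahead.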

`preemptible`: If your run can be retried, you can set `preemptible` to `True`. Unless specified, all submitted runs are non-preemptible.

`max_retries`: The maximum number of times our system will attempt to retry your run.

`retry_on_system_failure`: Set `retry_on_system_failure` to `True` if you want your run to be retried when it encounters a system failure.

`max_duration`: The time (in hours) that your run can run before it is stopped.

### Integrations

We support many integrations to customize aspects of both the run setup and the environment.

Integrations are specified as a list in the YAML. Each item in the list must specify a valid `integration_type` along with the relevant fields for the requested integration.

The example below shows three integrations: automatically cloning a GitHub repository, installing Python packages, and setting up logging to a Weights & Biases project.

```yaml
integrations:
  - integration_type: git_repo
    git_repo: org/my_repo
    git_branch: my-work-branch
  - integration_type: pip_packages
    packages:
      - numpy>=1.22.1
      - requests
  - integration_type: wandb
    project: my_weight_and_biases_project
    entity: mosaicml
```
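The rule that every list item needs an `integration_type` plus that type's fields can be sketched as a small checker. The per-type key sets below are hypothetical minimums taken only from the example above. The Integrations Page remains the authority on each type's real required and optional fields, and types missing from the mapping are reported as "not covered" rather than invalid.

```python
from typing import Any, Dict, List

# Hypothetical minimum keys per type, taken only from the example above; see
# the Integrations Page for each type's actual required and optional fields.
REQUIRED_KEYS = {
    "git_repo": {"git_repo"},
    "pip_packages": {"packages"},
    "wandb": {"project"},
}


def check_integrations(integrations: List[Dict[str, Any]]) -> List[str]:
    """Report list items lacking an integration_type or that type's keys."""
    problems = []
    for i, item in enumerate(integrations):
        kind = item.get("integration_type")
        if kind is None:
            problems.append(f"integrations[{i}]: missing integration_type")
        elif kind not in REQUIRED_KEYS:
            problems.append(f"integrations[{i}]: type {kind!r} not covered by this sketch")
        else:
            for key in sorted(REQUIRED_KEYS[kind] - set(item)):
                problems.append(f"integrations[{i}] ({kind}): missing {key}")
    return problems


integrations = [
    {"integration_type": "git_repo", "git_repo": "org/my_repo", "git_branch": "my-work-branch"},
    {"integration_type": "pip_packages", "packages": ["numpy>=1.22.1", "requests"]},
    {"integration_type": "wandb", "project": "my_weight_and_biases_project", "entity": "mosaicml"},
]
print(check_integrations(integrations))  # []
print(check_integrations([{"git_repo": "org/my_repo"}, {"integration_type": "wandb"}]))
```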

You can read more about integrations on the Integrations Page.

Some integrations may require adding secrets. For example, pulling from a private GitHub repository requires the `git-ssh` secret to be configured.

See the Secrets Page.

### Environment Variables

Environment variables can also be injected into each run at runtime through the `env_variables` field.

For example, the YAML below prints “Hello MOSAICML my name is MOSAICML_TWO!”:

```yaml
name: hello-world
image: python
command: |
  sleep 2
  echo Hello $NAME my name is $SECOND_NAME!
env_variables:
  NAME: MOSAICML
  SECOND_NAME: MOSAICML_TWO
```

The command reads each environment variable's value by its key (in this case, `$NAME` and `$SECOND_NAME`).
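You can reproduce this behavior locally by running the same `echo` line in a POSIX shell with the variables added to its environment. The shell performs the `$NAME` expansion. That the run's container launches the command in a similar shell is an assumption of this sketch, not documented mcli behavior. The `sleep 2` line is dropped.

```python
import os
import subprocess

# The example's command, without the `sleep 2` line.
command = "echo Hello $NAME my name is $SECOND_NAME!"
env_variables = {"NAME": "MOSAICML", "SECOND_NAME": "MOSAICML_TWO"}

# Run it in `sh` with the variables merged into the current environment
# (an assumption about how the run's container launches the command).
result = subprocess.run(
    ["sh", "-c", command],
    env={**os.environ, **env_variables},
    capture_output=True,
    text=True,
    check=True,
)
print(result.stdout.strip())  # Hello MOSAICML my name is MOSAICML_TWO!
```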

Do not use for sensitive environment variables

This configuration is not intended for sensitive environment variables such as API keys, tokens, or passwords. Configure those through environment variable secrets instead.

### Parameters

The provided parameters are mounted as a YAML file in your run at `/mnt/config/parameters.yaml` for your code to access.

Parameters are a popular way to easily configure your training run.
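A training script can read the mounted file at startup. The minimal sketch below uses the documented path and the third-party PyYAML package. Returning an empty dict when the file is absent, so the same script also runs outside a run, is an assumption of this sketch. `load_parameters` and the `lr` key are hypothetical names.

```python
from pathlib import Path
from typing import Any, Dict

# Mount point documented above. Returning {} when the file is absent (e.g. when
# the script runs on a laptop instead of in a run) is an assumption of this sketch.
PARAMETERS_PATH = Path("/mnt/config/parameters.yaml")


def load_parameters(path: Path = PARAMETERS_PATH) -> Dict[str, Any]:
    """Load the run's parameters as a dict."""
    if not path.exists():
        return {}
    import yaml  # PyYAML; imported here so local runs without it still work

    with path.open() as f:
        return yaml.safe_load(f) or {}


params = load_parameters()
lr = params.get("lr", 1e-3)  # "lr" is a hypothetical parameter name
```

Inside a run, `params` holds whatever you supplied in the `parameters` field. Elsewhere, the script falls back to its defaults.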

### Metadata

Metadata is a multipurpose, unstructured place to put information about a run. You can set it at the beginning of the run, for example to add custom run-level tags or groupings:

```yaml
name: hello-world
image: bash
command: echo 'hello world'
metadata:
  run_type: test
```

Metadata on your run is readable through the CLI or SDK:

```
> mcli describe run hello-world-VC5nFs
Run Details
Run Name    hello-world-VC5nFs
Image       bash
...
Run Metadata
KEY         VALUE
run_type    test
```

```python
from mcli import get_run

run = get_run('hello-world-VC5nFs')
print(run.metadata)
# {'run_type': 'test'}
```

You can also update metadata while the run is in progress. This can help with exporting metrics or other information from the run:

```python
from mcli import update_run_metadata

run = update_run_metadata("hello-world-VC5nFs", {"run_type": "test_but_updated"})
print("New metadata values:", run.metadata)
```